Implicit Interaction

(SSF, Brown & Höök)

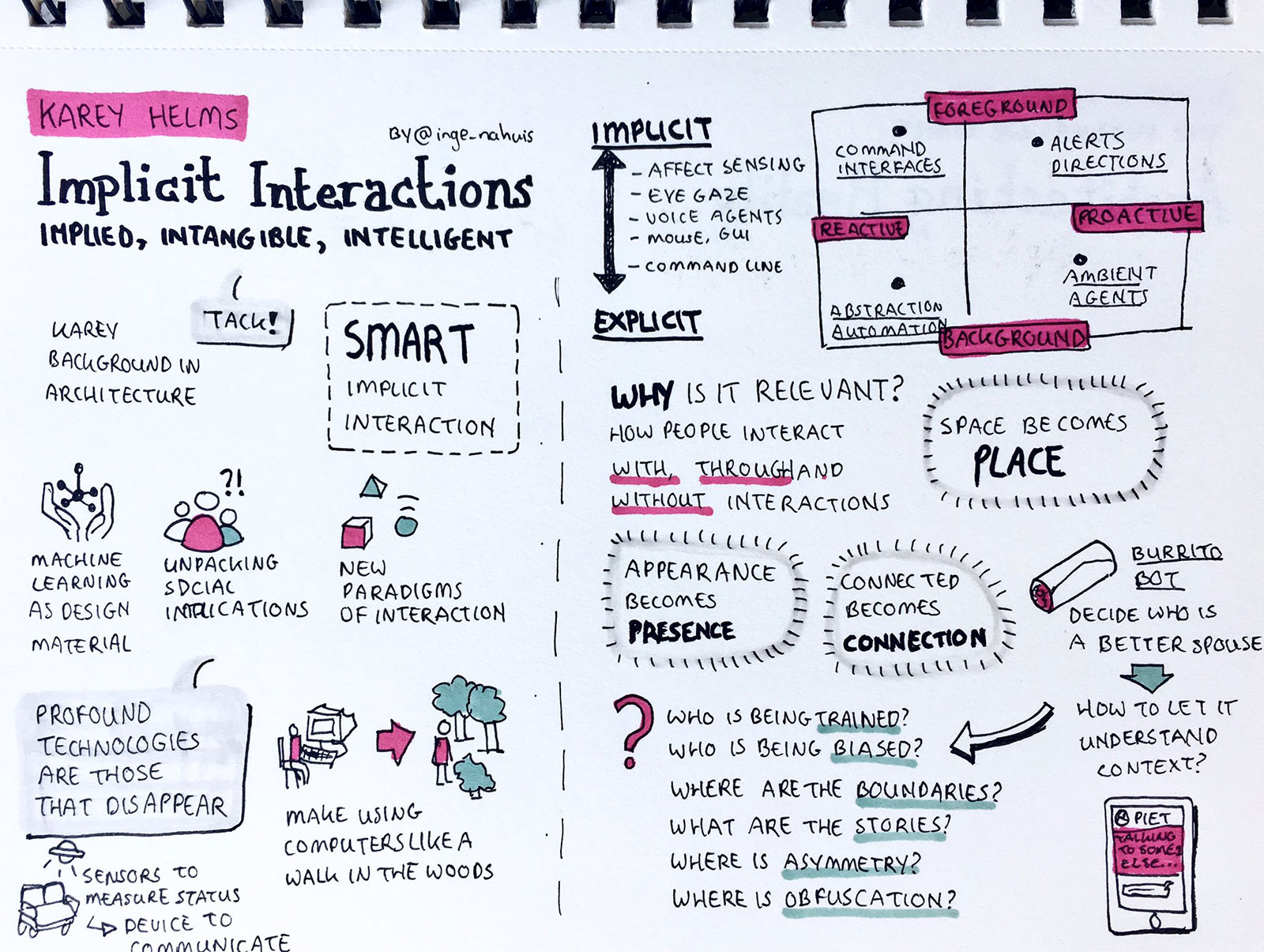

This project is a collaboration between Stockholm University, KTH and RISE. It is built around developing a new interface paradigm that we call smart implicit interaction. Implicit interactions stay in the background. They draw on analysis of speech, movement and other contextual data, and they avoid disturbing us unnecessarily or grabbing our attention.

When we turn to them, implicit interactions respond in one of two ways, depending on context and functionality. They may shift into an explicit interaction, engaging us in a classical interaction dialogue that starts from an analysis of the context at hand. Alternatively, they may continue to engage us implicitly through entirely different modalities that do not require an explicit dialogue: the smart objects respond to the ways we move or engage in other tasks. During the first half of the project, we have created a range of applications for the home, the body, the outdoors and intimate healthcare. We have also worked with autonomous systems, creating the Tama robot, which wakes up through eye-gaze interaction, and putting custom-built drones on stage in an opera performance.

Based on these explorations, we have put together a Soma Design toolkit that supports early idea formation in these settings. The toolkit combines heat, vibration, gaze interaction, shape-changing materials and biosensor input, all integrated with the help of interactive machine learning that feeds off sparse data streams. We have also created the beginnings of machine learning on multimodal data, to help shift models beyond language and speech towards more complex models of human expression. Together, our design experiments and technological inventions have begun to reveal how implicit interactions can be shaped to make sense as part of our everyday environments.

Academically, the project has produced one book, three journal papers and approximately 20 papers at highly renowned peer-reviewed conferences.